Folks, see you in Beijing! I even used Cursor to build a dedicated page for the event 🥳

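🎯 LinkLoud Event Registration | Growth Strategies for Taking AI Products Global

Offline event on January 24, exploring:

🌍 Multilingual localization strategy

📈 Paths to monetization

👥 Face-to-face time with industry experts

For AI/SaaS founders, product managers, and growth leads

Event details: mp.weixin.qq.com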

LinkLoud January 24, 2026 Event Registration: AI and Global Growth Strategies | Alignify

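I recently noticed in the Vercel dashboard that the firewall had blocked a lot of AI crawlers, so I did some research and wrote an article.

Bot traffic has surpassed human traffic for the first time, now making up 51% of internet traffic! The article explains how search engine crawlers differ from AI crawlers, and how AI companies such as Perplexity and Bytespider violate the robots.txt protocol to scrape content covertly. A must-read crawler management guide for website owners.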

The Complete Guide to Web Crawlers: Search Engine Crawlers vs. AI Crawlers | Alignify
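A minimal sketch of the robots.txt side of crawler management, assuming you want to turn away some AI crawlers while leaving search crawlers alone. The user-agent tokens below (GPTBot, PerplexityBot, Bytespider, CCBot) are illustrative examples, not a complete or current list, and `build_robots_txt` / `is_allowed` are hypothetical helpers checked with Python's standard `urllib.robotparser`. Because some crawlers ignore robots.txt, this only states your intent; actual blocking still has to happen at a firewall such as Vercel's.

```python
from urllib.robotparser import RobotFileParser

# Example AI crawler user-agent tokens (illustrative, not exhaustive).
AI_CRAWLERS = ["GPTBot", "PerplexityBot", "Bytespider", "CCBot"]


def build_robots_txt(blocked_agents: list[str]) -> str:
    """Hypothetical helper: one Disallow-all group per blocked agent,
    plus a catch-all group that allows every other crawler."""
    groups = [f"User-agent: {agent}\nDisallow: /" for agent in blocked_agents]
    groups.append("User-agent: *\nAllow: /")
    # Groups are separated by a blank line, as in a hand-written robots.txt.
    return "\n\n".join(groups) + "\n"


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Evaluate the rules locally with the standard-library parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    robots = build_robots_txt(AI_CRAWLERS)
    print(robots)
    for agent in ["Googlebot", "Bingbot", "GPTBot", "Bytespider"]:
        print(agent, is_allowed(robots, agent, "https://example.com/blog/post"))
```

The catch-all `User-agent: *` group keeps search crawlers such as Googlebot and Bingbot allowed. Note that the standard-library parser's agent matching is close to, but not identical with, how each real crawler interprets the file.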

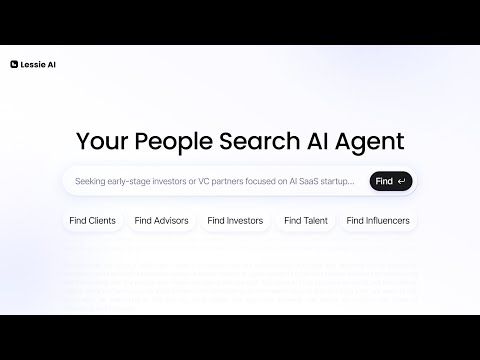

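A client's product, officially launching in late January. You can use it to find influencers (or anyone else, for that matter).

Lessie AI: an AI-agent search engine (the tagline I came up with: m.okjike.com) that automatically discovers and contacts influencers and KOLs, scans 100+ platforms, matches intelligently, and handles outreach automatically.

It's currently in public beta. Invite codes are on the web page (limited to 250 people), so help yourself if you need one.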

Curated List of AI Products in Public Beta: Lessie AI and Other Innovative Tools | Alignify

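Found another neat use for Cursor: I have a YC W24 client whose working language is English.

Since my English is terrible and I didn't want to embarrass myself, I just had Cursor write up the solution in English: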

Solution: Block Indexing of business.xxx.com Pages

Problem: Pages under business.xxx.com consume crawl budget, preventing normal pages from being indexed (GSC shows "Discovered - currently not indexed").

Recommended Solution: Use both robots.txt and meta noindex

Option 1: robots.txt (Primary - Prevents Crawling)

Add to business.xxx.com/robots.txt:

User-agent: *
Disallow: /

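Note: robots.txt prevents crawling but doesn't guarantee exclusion, because a blocked URL can still be indexed (without its content) if it is linked externally or listed in a sitemap. Google also cannot see a noindex tag on a page it isn't allowed to crawl, so if these pages are already indexed, add meta noindex first, let Google recrawl and drop them, and only then add the Disallow rule.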
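To sanity-check Option 1 before deploying it, the rule can be evaluated locally with Python's standard `urllib.robotparser`. This is a minimal sketch: `business.xxx.com` is the placeholder host from the write-up, the sample paths are made up, and `blocked_urls` is a hypothetical helper.

```python
from urllib.robotparser import RobotFileParser

# robots.txt proposed for business.xxx.com (placeholder host from the write-up).
BUSINESS_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that `user_agent` may NOT crawl under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    # Hypothetical sample pages on the subdomain.
    pages = [
        "https://business.xxx.com/",
        "https://business.xxx.com/pricing",
        "https://business.xxx.com/docs/getting-started",
    ]
    print(blocked_urls(BUSINESS_ROBOTS_TXT, pages))
```

robots.txt is scoped per host, so this file on business.xxx.com does not affect crawling of the main xxx.com site.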

Option 2: Meta Noindex Tag (Secondary - Prevents Indexing)

Add to the <head> section of all pages under business.xxx.com:

<meta name="robots" content="noindex, nofollow">

Implementation: Add this meta tag in the layout/template for all business.xxx.com pages.
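One quick way to confirm that the template change from Option 2 actually reaches the rendered HTML is to parse a page and look for the robots meta tag. Here is a minimal sketch using only Python's standard `html.parser`. `RobotsMetaParser` and `has_noindex` are hypothetical names. The sketch does not check the `X-Robots-Tag` HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from every <meta name="robots" content="..."> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # html.parser already lower-cases tag and attribute names (not values).
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        for directive in attributes.get("content", "").split(","):
            self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """True if a robots meta tag contains noindex (or "none", which implies it)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return bool({"noindex", "none"} & parser.directives)


if __name__ == "__main__":
    page = """<!doctype html>
<html><head>
  <meta name="robots" content="noindex, nofollow">
  <title>Business</title>
</head><body></body></html>"""
    print(has_noindex(page))  # True
```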

Why Both?

- robots.txt: Reduces crawl budget usage by preventing crawls

- meta noindex: Prevents indexing even if pages are discovered via external links

References:

- Google: Block search indexing with noindex - developers.google.com

- Google: Manage your crawl budget - developers.google.com

Action Items:

1. Create/update business.xxx.com/robots.txt with Disallow: /

2. Add <meta name="robots" content="noindex, nofollow"> to all business subdomain pages

3. Request removal in Google Search Console for faster de-indexing

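That's maximum emotional value for the client, too. With a domestic client, I'd just tell the front-end developer not to let Google index this subdomain.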

m.okjike.com I broke my promise: after writing 30+ AI-related articles, I rewarded myself with one on a topic I actually find interesting.

—————————

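GEO optimization is a hot topic right now, but many people may not realize that the tools on the market that claim to improve Visibility still work by pushing content to Bing, so that LLMs pick it up indirectly.

Today I was integrating the IndexNow API into my site and did some research along the way, which turned up something interesting:

Most third-party GEO indexing tools (such as IndexJump, Indexly, and TrySight) started out as traditional search-indexing tools. TrySight, for example, is a rebrand of IndexPilot.

Core concept: Push vs. Pull indexing

There are two main ways content gets indexed:

Pull indexing (the traditional way): search engine crawlers periodically visit a site and fetch its content, i.e. "the search engine comes to you." It suits static content, but discovery is slow and can take days or even weeks.

Push indexing (the modern way): the site proactively notifies search engines when URLs change, i.e. "you tell the search engine there's new content." Pushing in near real time via IndexNow or an indexing API speeds up discovery, which suits frequently updated content such as e-commerce products and news. (Google's Indexing API, however, officially supports only job-posting and livestream video pages.)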
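On the pull side, the main thing a site controls is how easy its URLs are to discover. The usual way to do that is an XML sitemap with `<lastmod>` dates, and crawlers still decide for themselves when to fetch it. Below is a minimal sketch using Python's standard `xml.etree.ElementTree`. The URL and date are placeholders, and `build_sitemap` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Build a minimal sitemap.xml; crawlers pull it on their own schedule."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in sorted(pages.items()):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # <lastmod> hints which pages changed since the crawler's last visit.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder URL and date.
    print(build_sitemap({"https://example.com/blog/push-vs-pull-indexing": date(2025, 1, 15)}))
```

With push indexing, the site sends the same changed URLs to the search engine directly instead of waiting for the next crawl.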

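Key points:

Many GEO tools claim they can "push your site content to optimize GEO," but in practice they are still pushing to Bing and letting LLMs pick the content up indirectly. It's a bit like typing your brand and URL into an LLM and hoping it remembers you (just an analogy).

If a truly native AI indexing method existed (connecting or pushing directly to OpenAI, Anthropic, etc.), it would be a big market. A genuinely native approach would probably require direct partnerships with AI search engines, or new protocols and standards.

Practical recommendations:

Adopt the IndexNow protocol right away (an open protocol launched by Microsoft Bing and Yandex, supported by Bing, Naver, Yandex, and others)

Use the Bing URL Submission API (in Bing Webmaster Tools) to push content to Bing

Optimize site structure and semantic clarity so AI search engines can more readily understand and cite your content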
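The IndexNow recommendation boils down to a single HTTP POST. Below is a minimal sketch using the Python standard library, based on the protocol as published at indexnow.org. The endpoint, the 10,000-URL limit, the example key, and the helper names are assumptions you should verify against the current docs. You also need to host the key file on your own domain before submitting.

```python
import json
import re
import urllib.request
from urllib.parse import urlparse

# Shared endpoint listed at indexnow.org (assumption: verify before use).
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"
# Keys are 8-128 characters of letters, digits, and dashes.
KEY_PATTERN = re.compile(r"^[A-Za-z0-9-]{8,128}$")
MAX_URLS = 10_000  # per-request limit per the protocol docs (verify)


def build_indexnow_payload(key: str, urls: list[str]) -> dict:
    """Hypothetical helper: validate inputs and build the JSON body."""
    if not KEY_PATTERN.match(key):
        raise ValueError("key must be 8-128 letters, digits, or '-'")
    if not 1 <= len(urls) <= MAX_URLS:
        raise ValueError(f"submit between 1 and {MAX_URLS} URLs")
    hosts = {urlparse(url).netloc for url in urls}
    if len(hosts) != 1:
        raise ValueError("all URLs in one request must belong to the same host")
    host = hosts.pop()
    return {
        "host": host,
        "key": key,
        # Assumes HTTPS; the site must serve this file containing exactly the key.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": urls,
    }


def submit(payload: dict) -> int:
    """POST the payload and return the HTTP status (200/202 = received, not indexed)."""
    request = urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    # Hypothetical key and URL; nothing is sent unless submit() is called.
    payload = build_indexnow_payload("0123456789abcdef", ["https://example.com/blog/new-post"])
    print(json.dumps(payload, indent=2))
```

Keeping payload construction separate from the network call makes the validation easy to test offline. A 200 or 202 response only means the engine received the URLs, not that it will index them.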

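An old refrain, but an important one:

Visibility in search engines is the foundation. Push indexing speeds up discovery, but content quality and structure are what matter most.

This is probably the only analysis of (and speculation about) AI indexing on the web, and it includes actionable steps. If you're working on GEO, it's worth a read.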

—————————

Search Engine Indexing Tools: The Complete Guide to Push/Pull Indexing, IndexNow, and GEO | Alignify

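Once I figured this out, life suddenly got easier: writing what I personally like to read every day is pointless. It only satisfies my own urge to write and my own emotional needs, and it's tiring to write. I need to write what my clients, and my clients' customers, will actually read.

Building products is the same: a product built heads-down just to satisfy your own emotional needs will almost inevitably struggle to make money.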

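Kostja: If I really want to make money from SEO, I shouldn't be writing blogs for clients. I should publish the content on my own site and charge clients per click, following ad-auction logic (since they prefer paying for delivered results). Starting next week I'll pause the growth posts and write AI content for a few days.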

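My daily work mode these days, be like:

Lying on a recliner scrolling Douyin while supervising Cursor at work

Monthly "salaries" to pay out (Lovable $400, Cursor $200, Notion $24, Lovart $32, Vercel $20)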

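Here's how I do content and growth, and how I keep getting faster at it.

Every SEO-related item on my site is generated automatically by AI: meta tags, navigation menus, semantic URLs, breadcrumbs, sitemaps, site structure, contextual internal links, and category pages.

So how do I generate them with Cursor? I have Cursor read the corresponding article I wrote and configure rules from it: Meta Tag alignify.co, navigation menu alignify.co, semantic URLs alignify.co, breadcrumbs alignify.co, sitemap alignify.co, site structure alignify.co, Contextual Internal Links alignify.co, category pages alignify.co. If the output isn't what I expected, I track down the problem and the relevant references, add them back into the article, and run Cursor again. By the time the output meets expectations, the article is finished too.

In other words, my articles double as RAG material: related articles already published on my site are injected into the AI's prompt as context, to refine or generate new content on the same topic.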
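The RAG idea above can be sketched in a few lines. This sketch uses word-overlap scoring as a deliberately crude stand-in for real retrieval, such as embeddings or a search index. It is not how Cursor retrieves context internally. The `Article` records and the `retrieve` and `build_prompt` helpers are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Article:
    title: str
    body: str


def tokenize(text: str) -> set[str]:
    """Lower-cased word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, articles: list[Article], k: int = 2) -> list[Article]:
    """Return up to k published articles sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(a.title + " " + a.body)), a) for a in articles]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [article for _, article in scored[:k]]


def build_prompt(task: str, context: list[Article]) -> str:
    """Inject the retrieved articles into the prompt as the rules to follow."""
    sources = "\n\n".join(f"### {a.title}\n{a.body}" for a in context)
    return f"Follow the guidance in these published articles:\n\n{sources}\n\nTask: {task}"


if __name__ == "__main__":
    published = [
        Article("Breadcrumb navigation guide", "breadcrumbs show the page hierarchy"),
        Article("Sitemap guide", "a sitemap lists urls for crawlers"),
    ]
    print(build_prompt("Generate breadcrumbs for a new blog post",
                       retrieve("breadcrumbs blog", published)))
```

Each time an article is improved, the context fed to the next generation improves too. This is the feedback loop the post describes.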

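Content drives growth, so speeding up high-quality content creation is growth work in itself; and content about growth drives growth too.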

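Kostja: Folks, you know that moment when you're so speechless you just have to laugh? While coding, a front-end question popped into my head. Too lazy to ask AI, I just Googled it, and the very first result was an article I had written on this exact topic. I didn't remember ever covering this particular question, and sure enough it wasn't in there, so in the end I still used AI to find the answer.

<Link href> vs <a href>: the differences

1️⃣ Next.js <Link> component: a React component for client-side routing; renders as an <a> tag on the client; supports prefetching; renders as an <a> tag during SSR

2️⃣ HTML <a> tag: a standard HTML element; directly crawlable by search engines; works without JavaScript

SEO-friendliness compared

3️⃣ Next.js Link component (SSR): rendered as an <a> tag during SSR, so search engines can crawl it; with pure client-side rendering (CSR), it may not be crawled correctly

4️⃣ HTML <a> tag: the most direct and reliable option; crawlable without JavaScript; meets your requirement for pages that use HTML a tags

In the end I went with the HTML <a> tag to be safe. Most importantly: 1. I added this to the article, so the next lucky person who runs into this question won't need to ask AI; 2. I also added it to my Cursor rules.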
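The SEO point in this comparison comes down to one thing: does the link exist as an `<a href>` in the HTML the server returns, before any JavaScript runs? Below is a minimal sketch of that check using Python's standard `html.parser`. The two HTML snippets are made-up stand-ins for an SSR page and a purely client-rendered shell, not real Next.js output. `AnchorCollector` and `crawlable_links` are hypothetical names.

```python
from html.parser import HTMLParser


class AnchorCollector(HTMLParser):
    """Collects href values of <a> tags present in raw HTML, i.e. before any JS runs."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip <a> tags without a usable href
                self.hrefs.append(href)


def crawlable_links(html: str) -> list[str]:
    collector = AnchorCollector()
    collector.feed(html)
    return collector.hrefs


if __name__ == "__main__":
    # Made-up stand-ins: an SSR response vs. an empty client-rendered shell.
    ssr_html = '<nav><a href="/blog/link-vs-a">Link vs a</a></nav>'
    csr_html = '<div id="root"></div><script src="/app.js"></script>'
    print(crawlable_links(ssr_html))  # ['/blog/link-vs-a']
    print(crawlable_links(csr_html))  # []
```

Running this against a page's raw server response (for example, one saved with `curl`) shows which links a crawler can follow without rendering JavaScript.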
